Many accounts on social media networks share misinformation and false news. To reduce this, Facebook has decided to take action against these user accounts.

Facebook and other social media networks are growing tremendously as new users join. Many news publishers have started sharing news through social media to engage readers and attract more views.

Many accounts exploit this popularity for their own motives. Facebook and other social media sites like Twitter and Instagram have seen a rise in the sharing of misinformation and false news meant to create unrest in society.

Facebook has shared on its blog that it will take action against people who repeatedly share misinformation on its platform.

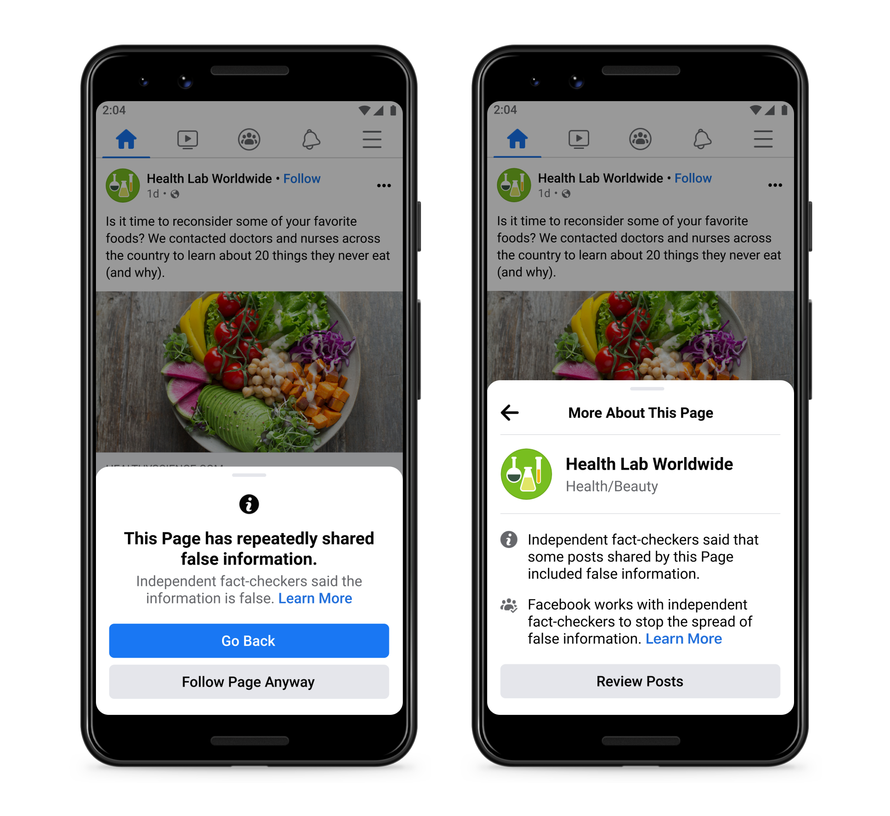

The social network giant will notify readers when the content they are viewing comes from a page that has repeatedly shared content rated false by one of the site's independent fact-checking partners.

This will reduce the reach of users who repeatedly share misinformation on the site, and it will lead to a reduction in the distribution of their posts in the News Feed.

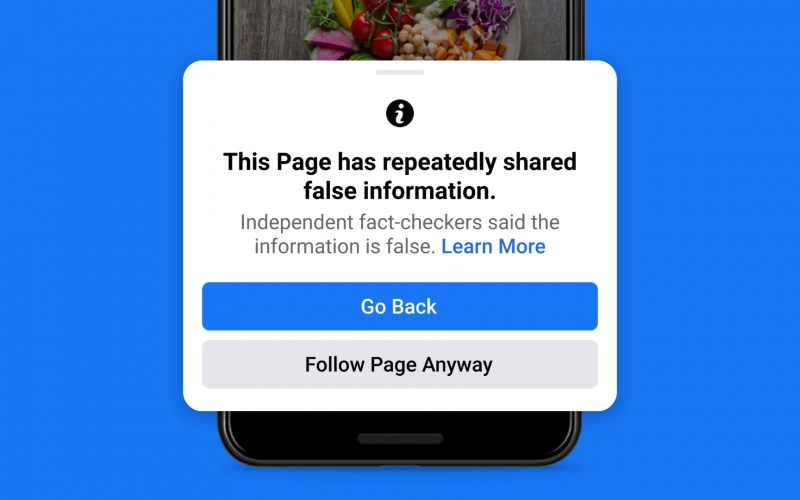

“We want to give people more information before they like a Page that has repeatedly shared content that fact-checkers have rated, so you’ll see a pop up if you go to like one of these Pages,” said Facebook.

This will help other users on the platform make an informed decision about whether to like the page and promote it further. It will also help reduce the sharing of misinformation on Covid-19 and other sensitive topics.

Facebook will reduce the distribution of all posts in News Feed from a user's account if they repeatedly share content that has been rated by one of the site’s fact-checking partners, which will make those posts less visible to other readers.

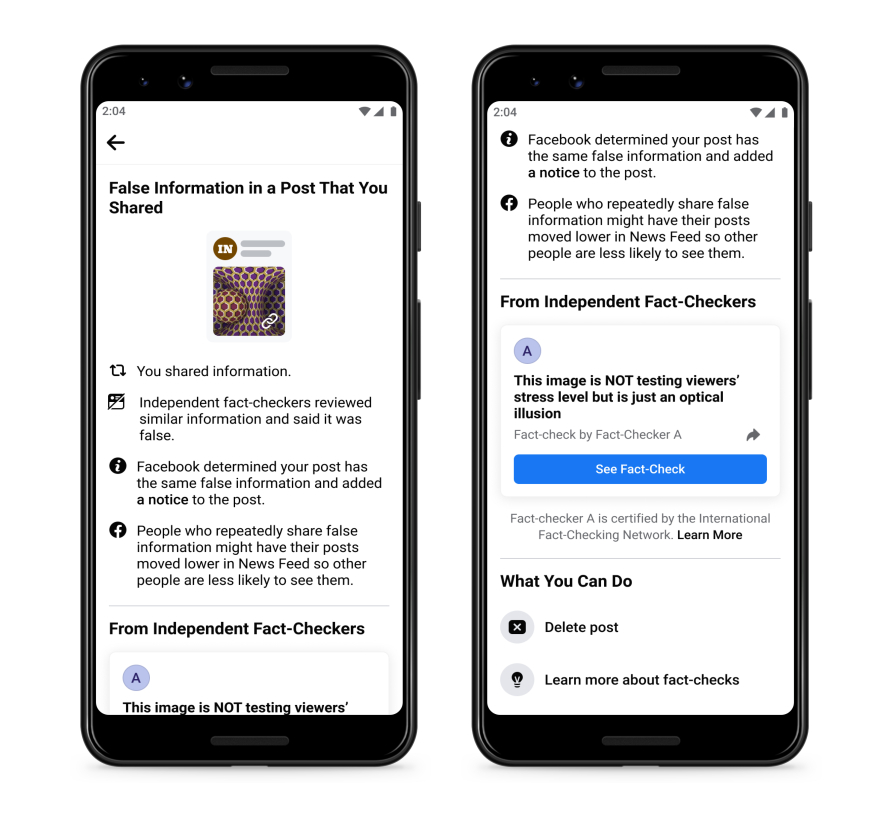

It will also notify users who shared a post that a fact-checker later rated. A custom notification will tell the user that the post they shared is a piece of false news, and the user can then either delete the post or learn more about the fact-checking program.

Facebook has been continuously introducing new ways of reducing the sharing of misinformation and false news since the launch of its fact-checking program in 2016. Other social media sites are also introducing new features to curb misinformation.

Restricting the spread of false news is not only the job of social media sites; users share that responsibility as well. We have to be aware of the news we are sharing with others and fact-check it beforehand.

What steps do you suggest these social media networks take to reduce false news? Let us know in the comments below, and do share this article with others.

Until next time,

Chao 🙂